References

General Language Model

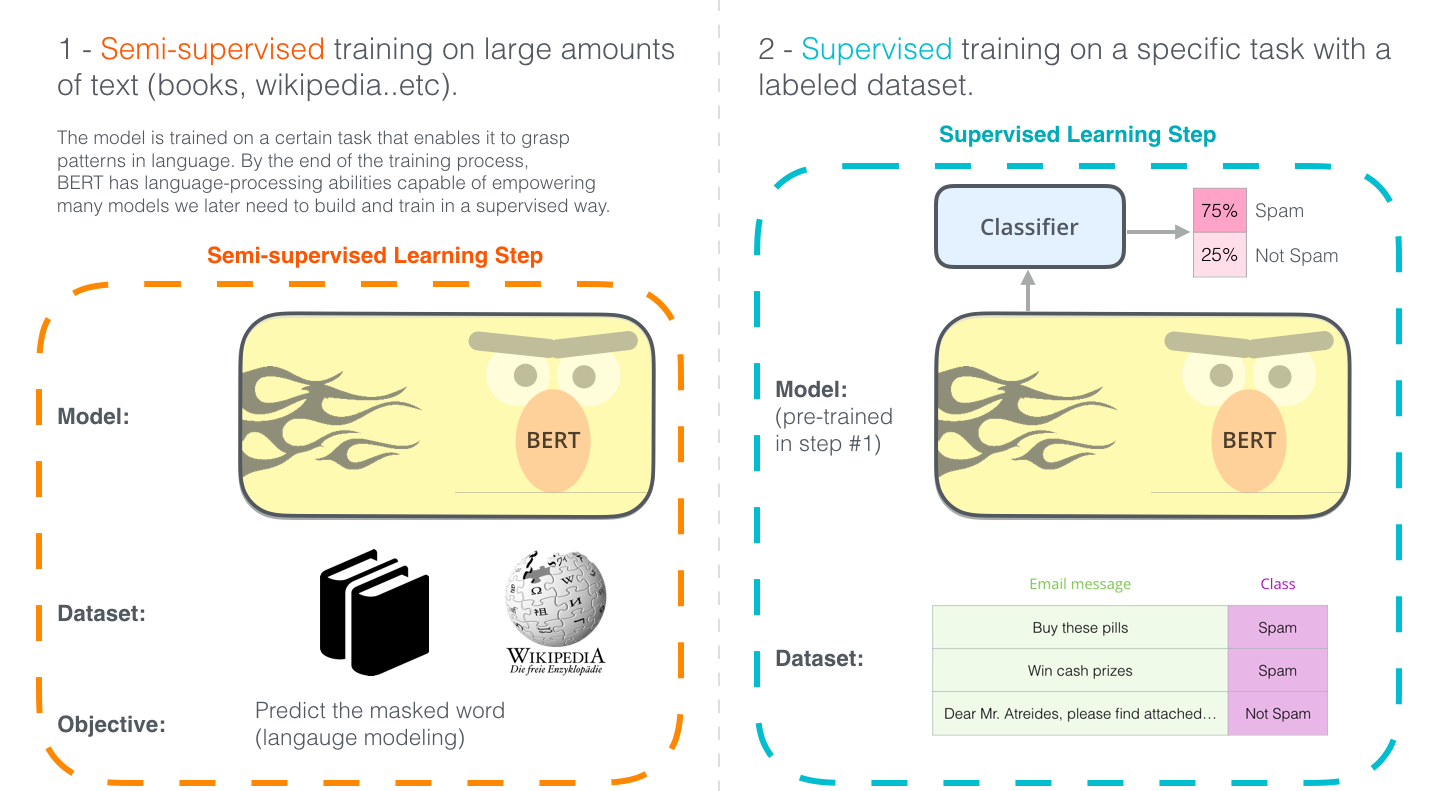

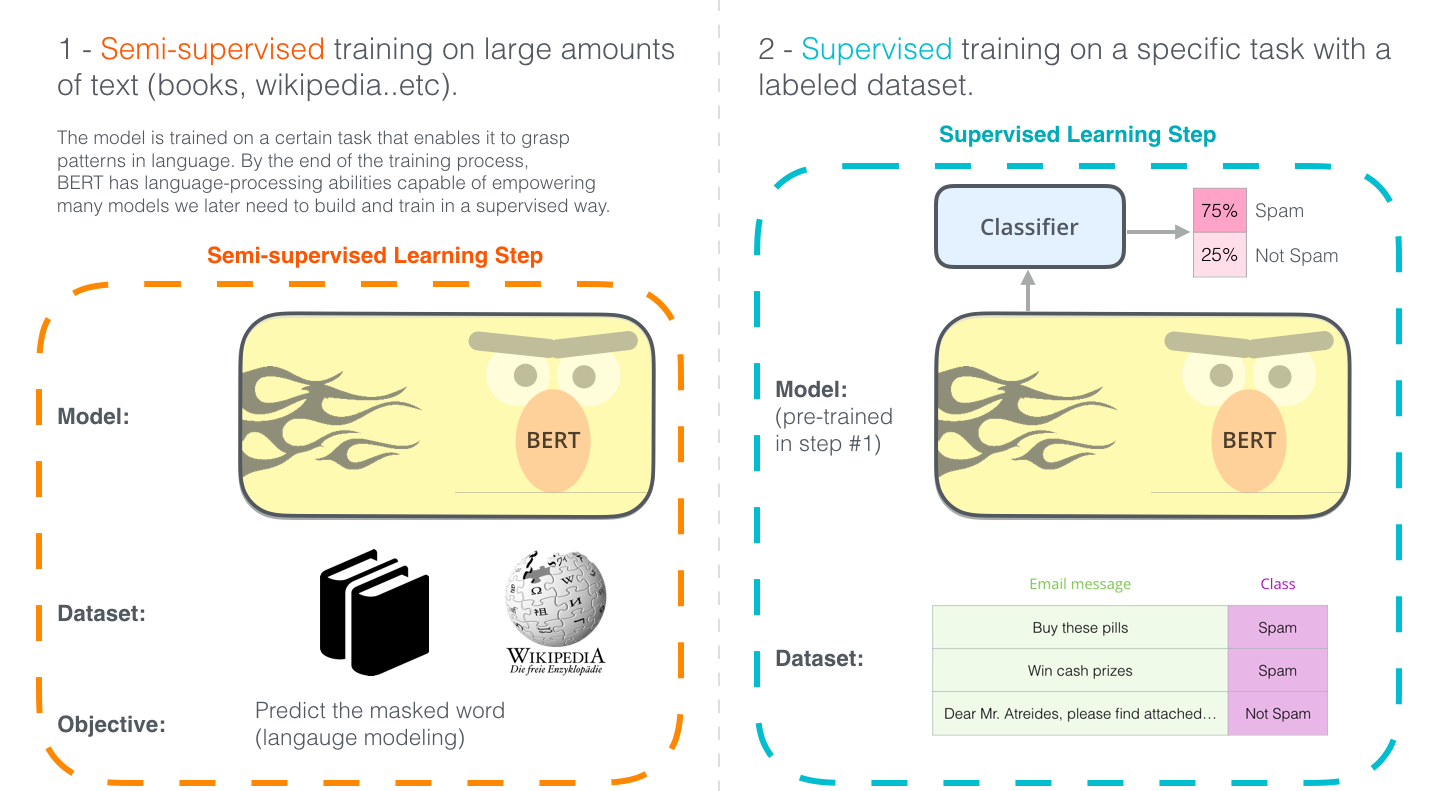

Goal: Build a general, pretrained language representation model.

Why: The model can be adapted easily to many NLP tasks, so we don't have to train a new model from scratch every time.

https://www.youtube.com/watch?v=BhlOGGzC0Q0

Context is everything!

- No context: Word2vec

- Left-to-right context: RNN

- Bidirectional context: BERT
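The three kinds of context above can be compared by listing which words are allowed to inform the representation of one target word. This is a minimal sketch: the function name `visible_context` and the mode labels are hypothetical. "No context" refers to Word2vec producing one fixed vector per word. Word2vec does use neighbouring words during training, but at lookup time the vector ignores the sentence it appears in.

```python
def visible_context(tokens, i, mode):
    """Return the tokens that can inform the representation of tokens[i] (hypothetical helper)."""
    if mode == "none":            # static embedding (Word2vec-style): one vector per word, sentence ignored
        return [tokens[i]]
    if mode == "left_to_right":   # RNN reading left to right: current word plus everything before it
        return tokens[: i + 1]
    if mode == "bidirectional":   # BERT-style: the whole sentence, both sides of the word
        return list(tokens)
    raise ValueError(f"unknown mode: {mode}")


sentence = "the bank of the river".split()
i = sentence.index("bank")
for mode in ("none", "left_to_right", "bidirectional"):
    print(f"{mode:>14}: {visible_context(sentence, i, mode)}")
```

Only the bidirectional view of "bank" includes "river", which is the word that tells us this is a riverbank and not a financial bank.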

Abstract

ref: https://jalammar.github.io/images/bert-transfer-learning.png

BERT → Bidirectional Encoder Representations from Transformers

- Bidirectional: Unlike previous models, which processed text either left-to-right or right-to-left, BERT reads the entire sentence at once and uses the context both before and after each word. This bidirectional context lets it capture meanings that depend on words on either side.

- Generalizable: A pretrained BERT model can be fine-tuned easily for downstream NLP tasks by adding a small task-specific output layer and training on the task's data.

- Encoder: BERT uses only the encoder part of the Transformer architecture. The encoder processes the input text and turns it into a set of contextualized word representations.
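The sketch below makes the "bidirectional" and "encoder" points concrete with single-head scaled dot-product self-attention in plain Python. It is not BERT's implementation. The 2-d embeddings, identity projection matrices, and the names `self_attention`, `encode` and `EMB` are hypothetical. Real BERT stacks many multi-head layers with learned weights, position embeddings, feed-forward sublayers and layer normalization (768-dimensional vectors in BERT-base). Without a mask, the vector for "bank" changes when the word to its *right* changes. A causal mask, used here only as a stand-in for left-to-right reading, leaves it unchanged.

```python
import math


def softmax(scores):
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0, so masked positions get zero weight
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def self_attention(X, Wq, Wk, Wv, causal=False):
    """Single-head scaled dot-product self-attention over token vectors X.

    causal=False (encoder-style): every position attends to every position.
    causal=True: position i attends only to positions 0..i (left-to-right stand-in).
    """
    Q = [matvec(Wq, x) for x in X]
    K = [matvec(Wk, x) for x in X]
    V = [matvec(Wv, x) for x in X]
    d_k = len(K[0])
    outputs = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # hide tokens to the right
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        # Weighted sum of value vectors = contextualized representation of token i
        outputs.append([sum(w * v[d] for w, v in zip(weights, V)) for d in range(len(V[0]))])
    return outputs


# Hypothetical toy embeddings and identity projections, purely for illustration
EMB = {"bank": [1.0, 0.0], "river": [0.0, 1.0], "loan": [1.0, 1.0]}
I2 = [[1.0, 0.0], [0.0, 1.0]]


def encode(words, causal=False):
    return self_attention([EMB[w] for w in words], I2, I2, I2, causal=causal)


bank_river = encode(["bank", "river"])[0]
bank_loan = encode(["bank", "loan"])[0]
print("bidirectional:", [round(x, 3) for x in bank_river], [round(x, 3) for x in bank_loan])
print("left-to-right:", encode(["bank", "river"], causal=True)[0], encode(["bank", "loan"], causal=True)[0])
```

The only difference between the two modes is the mask. That is why an encoder without a causal mask can use context on both sides of every word in a single pass.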